22/03/2024

In just a few short years, artificial intelligence (AI) has grown from a relatively obscure concept into something that is quickly changing our lives. According to a recent study, the global AI market will grow 37% annually from 2023 to 2030, creating more than 130 million jobs. This rapid proliferation of AI brings excitement and fear in equal measure, depending on who you ask, but there’s an elephant in the room: regulation.

As AI systems advance and are integrated into virtually every facet of the modern world, regulation is inevitable. Key jurisdictions such as the European Union are already making headway in introducing legislative frameworks designed to bring much-needed order to what has been called tech’s version of the Wild West, and many more will follow as the need for government-level oversight grows.

The EU AI Act

The EU AI Act is a landmark piece of legislation that aims to create a comprehensive legal framework governing the use and development of AI across all Member States. A vital component of the EU’s broader digital strategy, the EU AI Act reflects a proactive approach to addressing the ethical, societal, and technical challenges posed by rapidly advancing AI technologies.

The Act, passed by European lawmakers on March 13, 2024, aims to foster an environment where AI technologies can thrive, driving innovation and economic growth while ensuring that such developments benefit all citizens and are aligned with the public interest. The Act will also ensure that Europeans can trust what AI offers.

The EU AI Act will:

- Address risks specifically created by AI applications.

- Prohibit AI practices that pose unacceptable risks.

- Determine a list of high-risk applications.

- Set clear requirements for AI systems for high-risk applications.

- Define specific obligations for deployers and providers of high-risk AI applications.

- Put enforcement in place after a given AI system is placed on the market.

- Establish a governance structure at the European and national levels.

- Require a conformity assessment before an AI system is put into service.

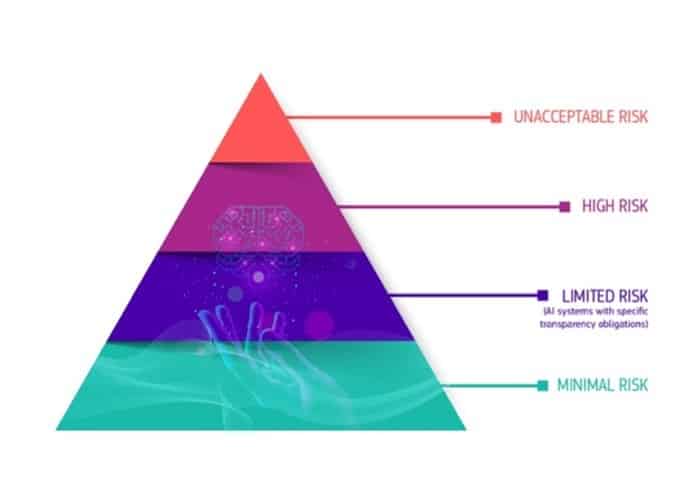

A risk-based approach to AI governance

The regulatory framework established by the AI Act introduces a principle that the higher the risk posed by an AI system, the more stringent the regulations it faces. This approach ensures that AI systems with the potential to significantly impact society or individuals are subject to more rigorous oversight and control.

One of the AI Act’s key aspects is categorising certain AI applications as posing an unacceptable risk, effectively banning their use within the European Union. This category includes AI applications that:

- Manipulate human behaviour to the extent that it can cause harm to individuals. These are systems designed to influence actions or decisions that could harm their well-being or autonomy.

- Facilitate the disadvantageous evaluation of individuals based on their social behaviour or personal characteristics. A prime example is the social credit system implemented in China, which restricts a person’s freedoms and opportunities based on their social status, as determined by their behaviour and other personal traits.

- Enable the real-time remote detection of people in public spaces and their biometric identification without their consent. While such practices are broadly prohibited due to their intrusive nature, the Act specifies exceptions for preventing terrorism or investigating serious crimes, acknowledging the balance between privacy concerns and security needs.

AI systems identified as high-risk will include AI systems used in key areas such as critical infrastructure (e.g., transport), educational or vocational training (e.g., scoring of exams), safety components of products (e.g., AI in robot-assisted surgery) and employment (e.g., CV-sorting software). High-risk AI systems will be subject to strict obligations before they can be put on the market:

- Adequate risk assessment and mitigation systems.

- Logging of activity to ensure traceability of results.

- Clear and adequate information to the deployer.

- Appropriate human oversight measures to minimise risk.

- High level of robustness, security and accuracy.

- Detailed documentation providing all the information on the system and its purpose that authorities need to assess its compliance.

Meanwhile, AI systems identified as limited risk will be subject to specific transparency obligations to ensure that humans are informed when necessary. When using a chatbot, for example, humans should be made aware that they’re interacting with a machine so that they can make an informed decision about whether to continue using the system. Providers will also have to ensure that AI-generated content is identifiable.

AI systems with minimal risk will have no restrictions and can be used freely. This includes applications such as AI-enabled video games or spam filters.
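
The four tiers described above can be pictured as a simple lookup from tier to obligations. The following is only an illustrative sketch, not a legal classification tool: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_USE_CASES`, `tier_for` and `obligations_for` are hypothetical, and the use cases and obligations are paraphrased from the examples in this article rather than taken from the text of the Act.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers described in the article (names are illustrative)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations per tier, paraphrased from the article; not the Act's wording.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited in the EU"],
    RiskTier.HIGH: [
        "risk assessment and mitigation",
        "activity logging for traceability",
        "clear information to the deployer",
        "human oversight",
        "robustness, security and accuracy",
        "detailed documentation",
    ],
    RiskTier.LIMITED: [
        "tell people they are interacting with a machine",
        "make AI-generated content identifiable",
    ],
    RiskTier.MINIMAL: [],
}

# Hypothetical labels mapped to tiers, using only the article's own examples.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "exam scoring": RiskTier.HIGH,
    "cv sorting": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
    "video game": RiskTier.MINIMAL,
}


def tier_for(use_case: str) -> RiskTier:
    """Look up the tier for a known example; raise if the label is unknown."""
    key = use_case.strip().lower()
    if key not in EXAMPLE_USE_CASES:
        raise KeyError(f"No example tier recorded for {use_case!r}")
    return EXAMPLE_USE_CASES[key]


def obligations_for(use_case: str) -> list[str]:
    """Return the paraphrased obligations attached to a use case's tier."""
    return OBLIGATIONS[tier_for(use_case)]
```

In practice, deciding which tier a system falls into is a legal assessment of its intended purpose, so a table like this would be the output of that assessment rather than a substitute for it.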

Who will be affected?

The EU AI Act will primarily affect providers, who are defined broadly to include all legal entities—whether public authorities, institutions, companies, or other bodies—that develop AI systems or commission the development of AI systems. This broad definition ensures that any organisation involved in placing AI technology on the EU market or putting such systems into service within the Union falls under the scope of the Act. The responsibilities of providers under the Act include, but are not limited to:

- Compliance with human rights: Ensuring that their AI systems do not compromise the fundamental rights of individuals, which include privacy protections, non-discrimination, and the safeguarding of personal data.

- Risk management: Conducting thorough risk assessments to identify and mitigate any potential harm their AI systems might pose to individuals or society.

- Transparency measures: Providing clear and understandable information about how their AI systems operate, the logic behind the AI decisions, and the implications of their use.

- Quality and safety standards: Adhering to predefined quality and safety standards that ensure AI systems are reliable, secure, and fit for their intended purpose.

The EU AI Act also addresses the responsibilities of users of AI systems, including legal entities and natural persons. This extends the law’s reach to private individuals, not just companies or organisations.

Under the Act, users of AI systems are expected to:

- Responsible use: Follow the manufacturer’s instructions and guidelines for the intended and safe use of AI technologies.

- Monitoring and reporting: Monitor the performance of AI systems in use and report any malfunctions or risks they encounter to the relevant authorities or providers.

- Data governance: Ensure that any data used in conjunction with AI systems is handled in accordance with the EU’s stringent data protection laws, such as GDPR, to protect individuals’ privacy and personal information.

What will the EU AI Act regulate?

The AI Act is a landmark piece of legislation that will address several key areas related to the deployment and operation of AI systems.

Ethics and responsibility

AI systems have the potential to significantly impact society, both positively and negatively. Ethical considerations are paramount, as the decisions made by AI systems can affect people’s lives, livelihoods, and rights.

The AI Act aims to ensure that AI is developed and used ethically, respects human rights, and safeguards individuals’ safety. It emphasises the need for AI systems to be designed with a human-centric approach, prioritising human welfare and ethical standards.

Transparency and accountability

One of the challenges with AI systems, particularly those based on machine learning and deep learning, is their “black box” nature. This opacity can make understanding how decisions are made difficult, leading to concerns about fairness, bias, and discrimination.

The AI Act mandates greater transparency and documentation for AI systems, requiring explanations of how they work, the logic behind their decisions, and the data they use. This is crucial for building accountability and ensuring that AI systems do not perpetuate or exacerbate societal inequalities.

Competitiveness

In the global race for technological advancement, the EU aims to position itself as a leader in ethical AI innovation. By establishing clear, harmonised rules for AI, the AI Act seeks to create a stable and predictable environment that encourages investment and research in AI technologies.

This regulatory clarity is intended to boost the competitiveness of European companies on the international stage, enabling them to innovate while adhering to high ethical and safety standards.

End-user trust

Public acceptance and trust in AI technologies are essential for widespread adoption and success. The AI Act aims to bolster public confidence in AI systems by implementing robust safeguards, ethical standards, and transparency requirements.

Ensuring that AI technologies are used in ways that protect and benefit society is expected to increase citizen trust, which is crucial for integrating AI into various aspects of daily life and the economy.

AI regulations in other jurisdictions

The EU isn’t alone in its efforts to regulate AI—the United States and the United Kingdom have made significant progress in introducing domestic policy.

In California, home to AI leaders like OpenAI and Google, a bill was recently introduced that aims to establish “clear, predictable, common-sense safety standards for developers of the largest and most powerful AI systems”. It focuses narrowly on the companies building the largest-scale models and on the possibility that those models could cause widespread harm if left unchecked.

Meanwhile, New York City’s Local Law 144, which was introduced in 2021 and applies to employers using automated employment decision tools (AEDTs) to evaluate candidates, requires that a bias audit be conducted on automated hiring processes.

Action is also taking place at the federal level. In October 2023, President Biden issued an Executive Order to establish new AI safety and security standards. The Order directs the following actions:

- Developers of the most powerful AI systems must share their safety test results and other critical information with the U.S. government.

- Develop standards, tools, and tests to help ensure that AI systems are safe, secure, and trustworthy.

- Protect against the risks of using AI to engineer dangerous biological materials.

- Protect Americans from AI-enabled fraud and deception by establishing standards and best practices for detecting AI-generated content and authenticating official content.

- Establish an advanced cybersecurity program to develop AI tools to find and fix vulnerabilities in critical software.

- Order the development of a National Security Memorandum that directs further actions on AI and security.

Meanwhile, UK legislators are also planning their own rules. The UK Government published its AI White Paper in March 2023, which sets out its proposals for regulating the use of AI in the UK. The White Paper is a continuation of the AI Regulation Policy Paper, which introduced the UK Government’s vision for the future of a “pro-innovation” and “context-specific” AI regulatory regime in the United Kingdom.

The White Paper proposes a different approach to regulating AI than the EU AI Act. Instead of introducing new, broad rules to regulate AI in the UK, the UK Government is looking to set expectations for the development of AI alongside existing regulators, such as the Financial Conduct Authority, and to empower them to regulate the use of AI within their respective remits.

Interplays between the AI Act and the EU GDPR

When the EU AI Act comes into force, it will be among the world’s first and largest regulations on AI. This has naturally got people thinking about its implications for the General Data Protection Regulation (GDPR), which has applied since May 2018.

The AI Act and GDPR differ in their scope of application. The AI Act applies to providers, users, and participants across the AI value chain, whereas the GDPR applies more narrowly to those who process personal data or offer goods or services, including digital services, to data subjects in the EU. AI systems that don’t process personal data will, therefore, not fall under the GDPR.

There are caveats to this, however. One potential gap between the AI Act and the GDPR concerns the requirement for AI system providers to facilitate human oversight. It hasn’t yet been defined which measures should be taken to enable this, or what degree of human oversight will be required for specific AI systems. The potential implication is that a system with only nominal oversight may still be treated as making solely automated decisions, in which case the obligations of Article 22 of the GDPR may apply.

The AI Act also notes that providers of high-risk AI systems might need to process special categories of personal data for bias monitoring and detection. Interestingly, the AI Act does not explicitly provide a lawful basis for doing this, and provisions under the GDPR are a grey area when applied in this context.

There might be a lawful processing ground to avoid biased data and discrimination under the legitimate interest provision under Article 6(1)(f), but when processing special categories of data, an exemption in Article 9(2) must also be met. This means that more than a legitimate interest is needed. While, in theory, system operators could obtain explicit consent from data subjects to process data to eliminate biases, this simply isn’t feasible.

These are two examples of potential sticking points between the EU AI Act and the GDPR. Both legal frameworks are complex undertakings with different scopes, definitions, and requirements, and the resulting challenges for compliance and consistency will need to be addressed.

When does the AI Act come into force?

The AI Act was initially supposed to come into force in 2022 but, as is often the case with large legislative undertakings like this, there have been setbacks.

The European Parliament approved the AI Act on March 13th, 2024, when MEPs voted to adopt it with 523 votes in favour, 46 against and 49 abstentions. The Council of the European Union is now expected to formally endorse the final text of the AI Act in April 2024. Following this last formal step and the conclusion of linguistic work on the AI Act, the law will be published in the Official Journal of the EU and enter into force 20 days after publication.

Once the AI Act enters into force, organisations will have between six and 36 months to comply with its provisions, depending on the type of AI system that they develop or deploy:

- 6 months for prohibited AI systems;

- 12 months for specific obligations regarding general-purpose AI systems;

- 24 months for most other commitments, including high-risk systems in Annex III; and

- 36 months for obligations related to high-risk systems in Annex II.
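
To see what these transition periods mean in calendar terms, the arithmetic can be sketched as below. This is a rough illustration under stated assumptions: the publication date is a made-up placeholder, the helper names (`add_months`, `entry_into_force_from`, `compliance_deadlines`) are hypothetical, and simple calendar arithmetic may not match the way the final legal text counts periods or fixes its application dates.

```python
import calendar
from datetime import date, timedelta

# Transition periods in months, as listed in the article.
TRANSITION_MONTHS = {
    "prohibited AI systems": 6,
    "general-purpose AI obligations": 12,
    "most other obligations (incl. Annex III high-risk)": 24,
    "Annex II high-risk obligations": 36,
}


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    If the target month is shorter, the day is clamped to its last day.
    """
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def entry_into_force_from(publication: date) -> date:
    """Entry into force, taken here as 20 days after publication (simplified)."""
    return publication + timedelta(days=20)


def compliance_deadlines(entry_into_force: date) -> dict[str, date]:
    """Map each obligation group to its deadline after entry into force."""
    return {
        label: add_months(entry_into_force, months)
        for label, months in TRANSITION_MONTHS.items()
    }


if __name__ == "__main__":
    # Placeholder publication date for illustration only; not the real one.
    hypothetical_publication = date(2024, 6, 1)
    in_force = entry_into_force_from(hypothetical_publication)
    for label, deadline in compliance_deadlines(in_force).items():
        print(f"{deadline.isoformat()}  {label}")
```

Swapping in the actual publication date once it is known gives a first approximation of the planning horizon for each category of obligation.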

As this landmark legislation progresses toward becoming law, companies must understand its implications and prepare for the changes it will bring. This preparation involves several key actions to ensure compliance and leverage the opportunities a well-regulated AI environment can offer.

Firstly, companies should thoroughly review their existing AI systems and applications. This audit should aim to identify any areas where their technology might not comply with the upcoming regulations. Given the AI Act’s focus on risk levels, understanding where each system stands regarding its potential impact on individuals’ rights and safety is vital. Companies might need to modify or discontinue certain AI functionalities that fall into the higher-risk categories or those deemed unacceptable under the Act.

Companies must also focus on implementing robust internal processes that ensure transparency and accountability when using AI. This means establishing transparent documentation practices, ensuring that AI decision-making processes are explainable, and setting up mechanisms for monitoring and reporting on AI’s performance and impact.
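
As one way to picture the documentation and monitoring practices described above, the sketch below records each AI-assisted decision as a structured log line. It is a minimal illustration, not a compliance template: the `DecisionRecord` fields and the `to_log_line` helper are hypothetical and are not prescribed by the AI Act, and the sample values are invented.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


def _utc_now() -> str:
    """Current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class DecisionRecord:
    """One traceable AI-assisted decision (all field names are illustrative)."""
    system_name: str
    model_version: str
    input_summary: str       # a summary, to avoid logging raw personal data
    output: str
    explanation: str         # plain-language reason for the output
    human_reviewer: Optional[str] = None  # set when a person reviewed the result
    timestamp: str = field(default_factory=_utc_now)


def to_log_line(record: DecisionRecord) -> str:
    """Serialise a record as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


if __name__ == "__main__":
    record = DecisionRecord(
        system_name="cv-screening-demo",
        model_version="0.1",
        input_summary="application for analyst role",
        output="shortlisted",
        explanation="meets the listed experience criteria",
        human_reviewer="hr-reviewer-01",
    )
    print(to_log_line(record))
```

Keeping the explanation and the reviewer alongside the output means the same record can serve both internal monitoring and any later request from an authority to show how a decision was reached.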